In July 2025, the Australian Government’s Digital Transformation Agency (DTA) released version 1 of the “Australian Government AI technical standard”. It is intended for Australian public sector agencies.

The 102-page document sets out a comprehensive framework to help public agencies adopt AI safely and ethically.

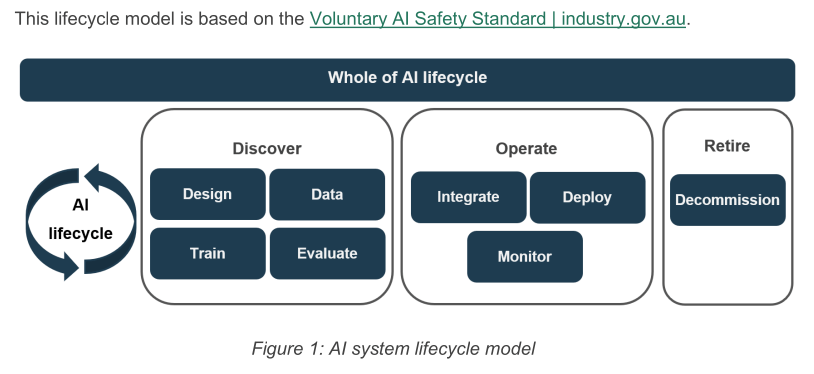

The Standard outlines a structured AI system lifecycle organised into three core phases: Discover, Operate, and Retire. These three phases come from the Australian Voluntary AI Safety Standard.

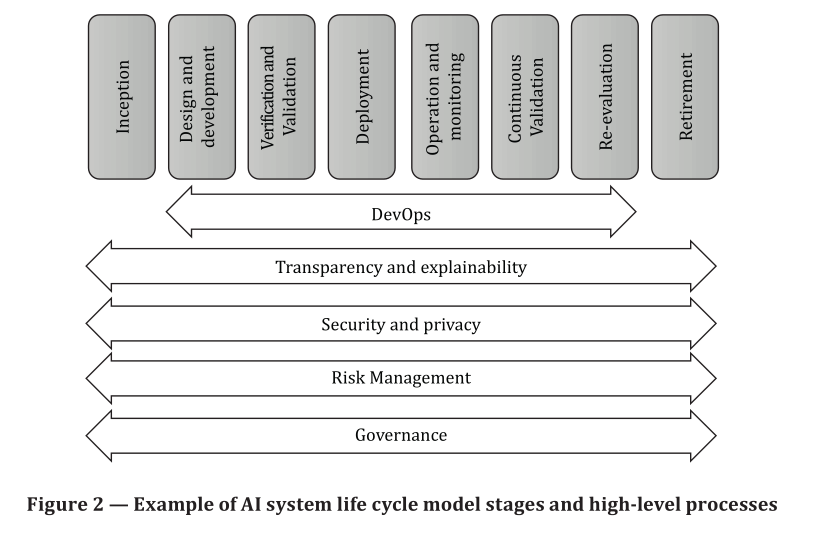

In effect, the Standard groups the eight stages of ISO 5338 into these three phases.

Each phase sets out specific technical requirements and guidelines for critical activities such as data management, model training, and performance monitoring.

I am glad that the AU document emphasises a human-centred approach (Statement 3: Identify and build people capabilities), mandating rigorous testing for bias, transparency, and safety to maintain public trust. It also defines various organisational roles and provides a use case assessment that can be used to determine how the Standard applies to different procurement and development models.

The Standard highlights Criterion 8, “Mitigate staff over-reliance, under-reliance, and aversion of AI”, as an important litmus test for building human capabilities.

I like the Standard’s inclusion of “ModelOps”: a set of practices and technologies designed to streamline and integrate the lifecycle management of decision models through interdisciplinary approaches and automation tools.

While ISO 5338 provides the governance requirements and technical definitions for what a lifecycle must include, ModelOps provides the practical means for Australian agencies to execute those processes securely and at scale.

Version control is addressed in the Standard’s Statement 7: Apply version control practices. This lines up nicely with ISO 5338’s guidance on applying configuration management to models together with the data used to train them, so that the application can be reproduced exactly. This can be difficult to achieve, but it is necessary.
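To make the idea of versioning a model together with its training data a little more concrete, here is a minimal sketch in Python. It assumes the model weights and training data are plain files on disk, and the helper names `build_manifest` and `verify_manifest` are hypothetical: neither the Standard nor ISO 5338 prescribes this format. It records a content hash for the model and for every training-data file alongside the training configuration, then derives one version ID from all of it, so a later rebuild can be checked against the recorded version.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(model_path: Path, data_paths: list[Path], config: dict) -> dict:
    """Hypothetical helper: pin a model version to the exact data and config used to train it."""
    manifest = {
        "model": {str(model_path): sha256_of(model_path)},
        "training_data": {str(p): sha256_of(p) for p in sorted(data_paths)},
        "config": config,  # assumed to be JSON-serialisable (seed, hyperparameters, etc.)
    }
    # One version ID derived from everything above: change any input and the ID changes.
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["version_id"] = hashlib.sha256(canonical).hexdigest()[:16]
    return manifest


def verify_manifest(manifest: dict) -> bool:
    """Re-hash the recorded files and confirm none has changed since the manifest was written."""
    recorded = {**manifest["model"], **manifest["training_data"]}
    return all(sha256_of(Path(p)) == digest for p, digest in recorded.items())
```

Storing the manifest in the same version control system as the code gives one place to answer “which data produced this model?”. Hashes only prove that the inputs are unchanged; exact reproduction also depends on pinning library versions and random seeds.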

An interesting aspect of the Standard is Statement 8: Apply watermarking techniques. While I think it is worth finding ways to watermark content, the fact remains that most forms of digital watermarking will be circumvented. The Standard references the Coalition for Content Provenance and Authenticity (C2PA), but it does recognise that watermarking has many limitations and risks.

I have been working on a way to authenticate any image or text one comes across using QR codes, a process that completely negates any attempt at circumvention. I am looking for collaborators (and perhaps investors) to take the working MVP to product stage.

Overall, the Standard is certainly useful, and kudos to the DTA for putting out the document (and apologies for the late review).

I am pleased that they included my favourite word when it comes to anything AI: test. It is mentioned four times, in Statements 27-30 and 38.

The Standard’s Statement 39, “Establish incident resolution processes”, is particularly interesting when compared with Singapore’s “Model AI Governance Framework for Agentic AI” and ISO 5338, as the three take distinctly different approaches.

• AU Standard: Features a dedicated statement (Statement 39) that explicitly requires agencies to define incident handling processes and implement corrective actions.

• SG Agentic AI framework: Treats incident resolution as part of proactive response and debugging, and suggests that for autonomous agents, “higher-priority” alerts should trigger a temporary halt of execution until a human can assess the incident, essentially a functional safety switch.

• ISO 5338: Notes that incident resolution can be more complex for AI because system versions do not always reflect the guaranteed behaviour of the operational configuration, especially in continuous learning systems. It recommends offering an automated model rollback process as a specific maintenance task to quickly resolve suboptimal performance.
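To illustrate how the SG framework’s halt-on-alert idea and ISO 5338’s automated rollback might fit together, here is a minimal Python sketch. It is a toy built on my own assumptions and is not taken from either document: the severity levels, the `Deployment` class and its methods are all hypothetical. A real system would persist state and notify people instead of appending to an in-memory log.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Deployment:
    """Hypothetical deployment state: which model version is live and whether execution is halted."""
    versions: list[str]  # history of deployed model versions, oldest first
    halted: bool = False
    log: list[str] = field(default_factory=list)

    @property
    def active(self) -> str:
        return self.versions[-1]

    def raise_alert(self, severity: Severity, message: str, halt_at: Severity = Severity.HIGH) -> None:
        """Log every alert; an alert at or above `halt_at` pauses execution (the safety switch)."""
        self.log.append(f"{severity.name}: {message}")
        if severity >= halt_at:
            self.halted = True

    def step(self, task: str) -> str:
        """Execute one agent action, refusing to act while halted."""
        if self.halted:
            raise RuntimeError("execution halted pending human review")
        return f"{self.active} handled {task}"

    def rollback(self) -> str:
        """Revert to the previous model version, as an automated maintenance task."""
        if len(self.versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        removed = self.versions.pop()
        self.log.append(f"rolled back {removed} -> {self.active}")
        return self.active

    def human_resume(self, reviewer: str) -> None:
        """Only an explicit human decision clears the halt."""
        self.log.append(f"resumed by {reviewer}")
        self.halted = False
```

The design choice worth noting is that rollback and resumption are separate: the system may revert the model automatically, but it does not restart execution until a person has assessed the incident.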

Footnote: This post was written entirely by me, with hints and suggestions from the open source tool Open Notebook running on my systems (Fedora Linux 43 and an AMD GPU). See https://github.com/lfnovo/open-notebook/issues/451 for my shell script, which you can use to run it on your own Fedora systems with Podman. I created a notebook with the three documents: the Standard, the Agentic AI framework and ISO 5338. I used open source IBM Granite models for both generation and embeddings.