I came across a post on LinkedIn with the heading “Why CIOs and CTOs Must Rethink Open Source AI: Lessons from DeepSeek’s Security Challenges”.

Yes, that is 100% clickbaity, and it references another clickbait post entitled “Security Threats of Open Source AI Exposed by DeepSeek”.

I’ll just focus on the contents of the two posts to point out how shallow both are.

Let’s start with the second post. I’ll quote portions and respond to them.

> While many vulnerabilities and risks of DeepSeek have already been uncovered, there are certain categories of risks that security and compliance professionals must consider when evaluating the use of any open source AI platform within their organizations. For purposes of categorizing risks, there are four discrete elements of generative AI (GenAI) tools, including:
>
> - The platform (user interface, technology infrastructure)
>
> - The “brain” (neural networks, LLM)
>
> - The data going in, namely in the form of plain language queries or prompts
>
> - The data coming out as responses or answers to user prompts
>
> Each of these elements has its own unique risks.

Let me be clear: DeepSeek is NOT open source in the way we all understand open source. Some components of their models are openly available, but we know nothing about their training data, among other things. For all intents and purposes, DeepSeek (like Llama and others) is proprietary freeware. Freeware is software that you can download and use; some of you have done that with PDF readers like those from Adobe (assuming they are still around). Freeware generally offers you use of the software but no access to the code used to build it, nor support.

There is a link in the quoted text above that leads to another article about how DeepSeek was built and how OpenAI (which itself used data from the Internet without permission) accused DeepSeek of taking OpenAI’s output for training. Yes, OpenAI is a well-known bad actor, and they have no shame in accusing others of behaving as badly as they do. That’s another conversation.

Let’s continue dissecting the second post. Under the heading “Open Source AI Risks Outweigh Rewards” it goes on to say the following, partially reproduced here:

> The “brains of the operation” can introduce unique risks. There are known risks of inaccurate or even dangerous conclusions, including hallucinations, which undermine the validity, credibility, and usability of the model. There may be biases in the “thought process” of a model that perpetuate or exacerbate human biases. Since bias tuning is an essential part of a model’s training process, extra care must be taken to tune neural networks in a manner that drives reasonable, objective, human-like results.
>
> **Because open source GenAI tools are not proprietary, there may also be liability risks.** Since there is no legal entity responsible for maintaining and updating the tool, are end users liable for all outcomes associated with its use? If an organization uses an open source tool that drives decision-making or customer experiences and something bad happens, how much responsibility will the organization be forced to accept?

Look at the highlighted sentence above. The author is promoting the false notion that proprietary code, models and tools are somehow free of the liability risks that open source ones carry.

That’s being disingenuous. Every model/tool carries risks.

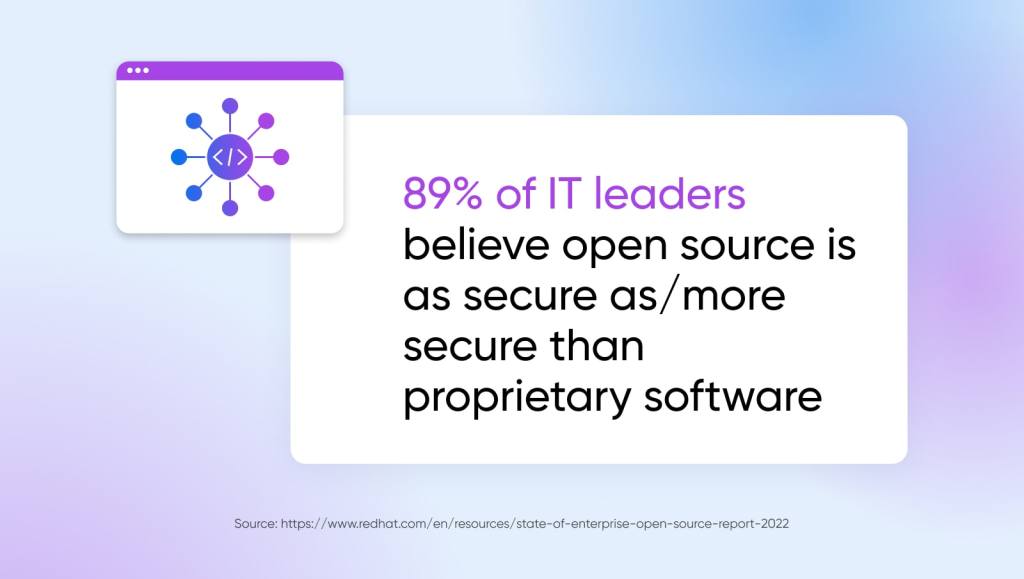

Being open source makes it far easier to find a problem and fix it, rather than depending on the proprietary vendor to fix it. This has been proven time and time again over the last four decades; the way the Internet, the web and online services flourished, driven by open source tools, stands as testimony to that. It is highly presumptuous of the author to make that claim.

The next sentence in the paragraph argues that because no legal entity is responsible for maintaining and updating the tool, the organization has to accept responsibility when something goes wrong, and that this is bad.

I’d pose the following to the author: do any of the software systems that his organization runs and uses come with *any* form of warranty? Without waiting for his answer, I can categorically say they do not.

This is one of the big embarrassing things about software: use it at your own risk. About the only software that might carry some accountability is that used in medical, nuclear and aviation systems.

Yes, you can have contractual obligations with vendors, penalty clauses and the like, but that’s about it.

The article goes on to make other statements that I’d call myth-repeating.

It is a pity that the author did not mention the critical distinction that anyone can download the proprietary freeware model from DeepSeek and run it locally on their own systems.

Try that with OpenAI. OpenAI is really FauxOpenAI: they have NOT released a model that one can run locally, and nothing about them is open at all.

It would appear that the author is happy to sing the praises of models delivered purely as SaaS, like OpenAI’s, whose inherent risks are similar to those of using DeepSeek via chat.deepseek.com, but conveniently leaves out that there is a way to run DeepSeek securely and privately, without the inherent risks of OpenAI.

Now let me turn to the LinkedIn post that triggered this response. I do hope that posts like that are critically scrutinized, and that what is useful and correct in them is separated from what isn’t.

The only saving grace in that LinkedIn post is this:

> - Block or Isolate Open-Source Models
>
> If exploring open-source models like DeepSeek, run them in isolated test environments:
>
> - Use Kubernetes, container security, and network segmentation
> - Prevent internet egress where possible
> - Log every prompt, input, and output for auditing

Yes, as noted above, run these proprietary/freeware models on your own infrastructure. That’s what I have done for everything I need to do.
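The advice to log every prompt, input, and output for auditing is easy to act on once a model runs on your own infrastructure. Here is a minimal sketch, assuming the locally hosted model is reachable from Python as a plain function that takes a prompt string and returns text. The `audited` wrapper and the `fake_local_model` stand-in are hypothetical names for illustration, not part of any particular tool. Each call is appended as one JSON line to an audit sink.

```python
import io
import json
import time
from typing import Callable, TextIO


def audited(generate: Callable[[str], str], sink: TextIO) -> Callable[[str], str]:
    """Wrap a model call so every prompt/output pair is appended to `sink` as JSON Lines."""

    def wrapper(prompt: str) -> str:
        output = generate(prompt)  # call the (hypothetical) local model
        record = {"ts": time.time(), "prompt": prompt, "output": output}
        sink.write(json.dumps(record) + "\n")  # one JSON object per line
        sink.flush()  # push the record out promptly for file-backed sinks
        return output

    return wrapper


# Hypothetical stand-in for a locally hosted model; replace with a real client.
def fake_local_model(prompt: str) -> str:
    return f"echo: {prompt}"


audit_log = io.StringIO()  # in-memory sink keeps the example self-contained
model = audited(fake_local_model, audit_log)
answer = model("Summarise our Q3 report")
```

In practice the sink would more likely be a file opened in append mode, for example `open("llm_audit.jsonl", "a", encoding="utf-8")`; the `io.StringIO` object is used here only so the sketch runs without touching the filesystem.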

And, again, open source is never hyphenated.

This is 2025, yet myths about open source continue to surface, and it is up to those of us who understand the nuances and issues to keep educating and clarifying.

Open source washing continues with the usual suspects like Meta, and DeepSeek is no exception. It is really important to recognise this.

To understand the critical nuances of licensing of open source AI, I’d suggest this talk I gave at FOSSAsia earlier this year.