What is a CPU (Central Processing Unit) and what is a GPU (Graphics Processing Unit)? And why should I care?

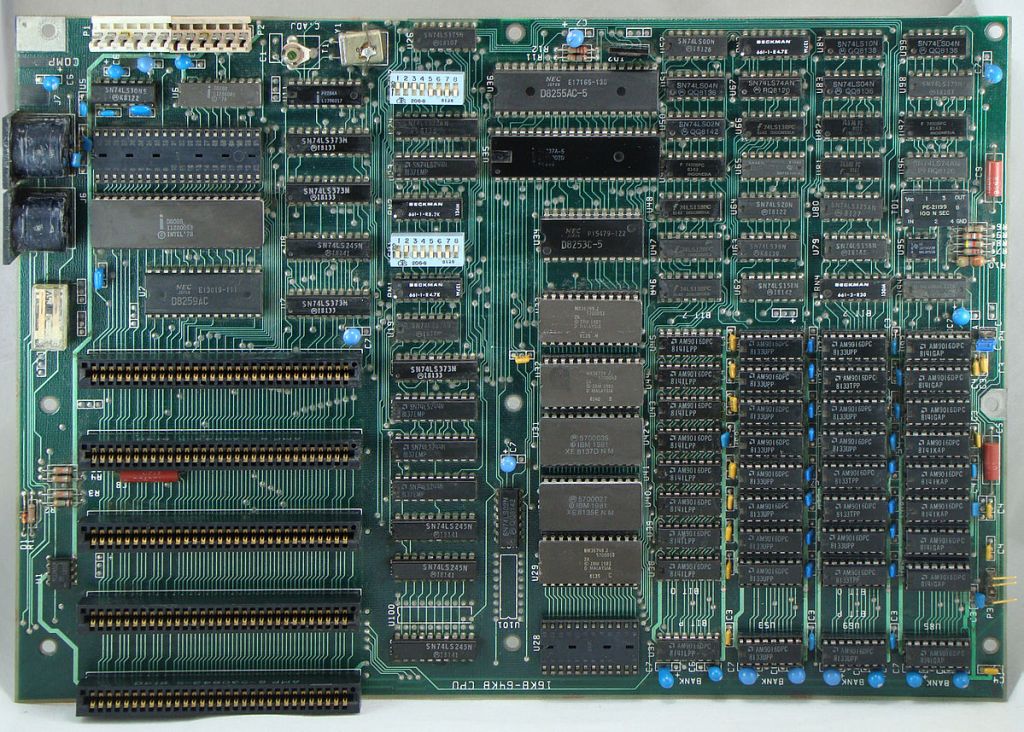

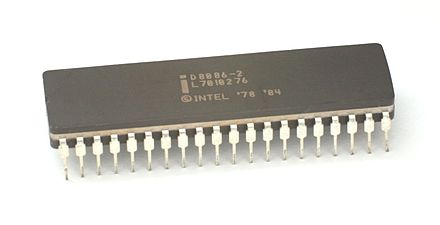

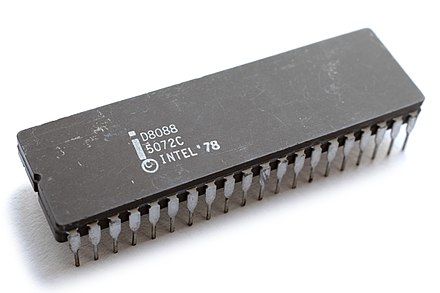

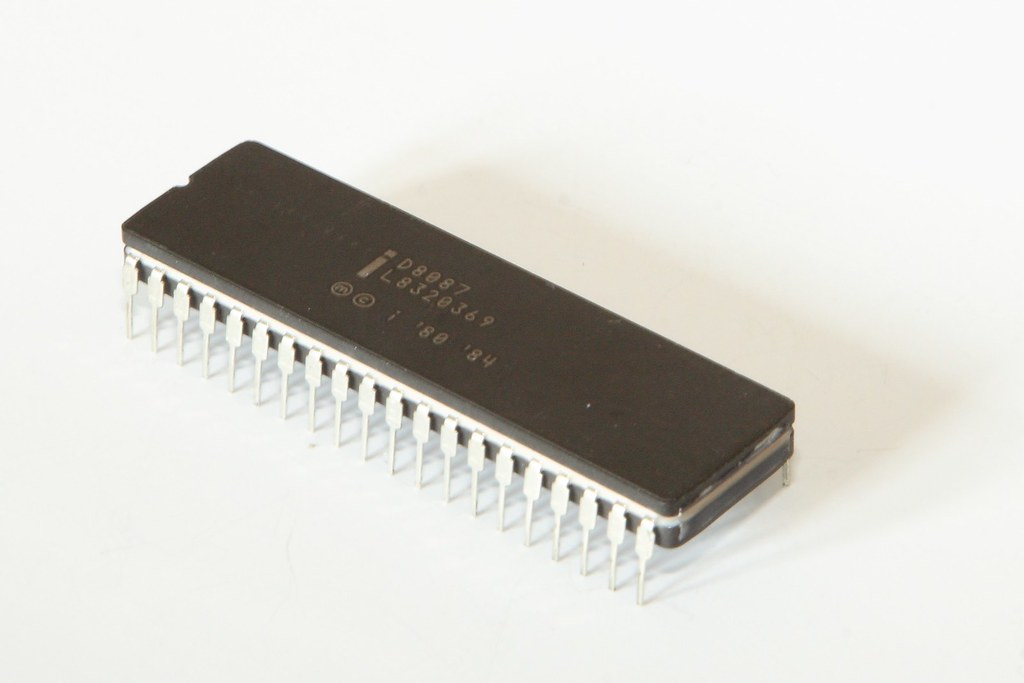

Over 40 years ago, in the days of the Intel 8088 CPU (the processor in the original IBM Personal Computer), all computing was done by the CPU. Some kinds of computation could not be done quickly enough, though, especially floating-point math and transcendental functions such as sine and logarithms. For that, Intel developed the 8087 math co-processor. This chip took care of the more complex mathematical computation and dramatically improved performance.

It is worth noting that Intel's other CPU of that era, the 8086 (the 8088's sibling with a 16-bit external data bus), also benefited from the 8087 co-processor, since neither CPU had hardware floating-point support.

These Intel CPUs and co-processors were all single-core devices, even if some pipelining was available. They clocked at roughly 5 to 16 MHz and were the workhorse for many users in the 1980s.

As systems evolved to run graphically intensive applications, the need arose for chips that could handle matrix computation efficiently.

Why matrix computation?

Matrices are heavily used in graphics because they provide a compact and efficient way to represent and combine transformations like translation, rotation, and scaling. These allow for complex operations to be performed quickly and easily, especially when manipulating multiple points or objects in a scene.
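To make that concrete, here is a minimal pure-Python sketch (no graphics library assumed) of 2D transformations in homogeneous coordinates. Scaling, rotation and translation are each a 3x3 matrix, they are combined into one matrix by multiplication, and that single matrix then moves every point of a shape in one matrix product. The helper names (`matmul`, `translation`, `rotation`, `scaling`, `transform_points`) are illustrative, not from any particular library.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); matrices are lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def translation(tx, ty):
    return [[1.0, 0.0, tx],
            [0.0, 1.0, ty],
            [0.0, 0.0, 1.0]]

def rotation(theta):
    # Counter-clockwise rotation by theta radians about the origin.
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def scaling(sx, sy):
    return [[sx, 0.0, 0.0],
            [0.0, sy, 0.0],
            [0.0, 0.0, 1.0]]

def transform_points(m, points):
    # Put each (x, y) point in a column as (x, y, 1) so that ONE matrix
    # product transforms every point in the shape at once.
    cols = [[x for x, _ in points],
            [y for _, y in points],
            [1.0] * len(points)]
    out = matmul(m, cols)
    return [(out[0][j], out[1][j]) for j in range(len(points))]

# Scale by 2, then rotate 90 degrees, then move 5 units right.
# Matrices apply right to left, so the combined matrix is T x R x S.
combined = matmul(translation(5.0, 0.0),
                  matmul(rotation(math.pi / 2), scaling(2.0, 2.0)))

unit_square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
moved = transform_points(combined, unit_square)
print([(round(x, 6), round(y, 6)) for x, y in moved])
# [(5.0, 0.0), (5.0, 2.0), (3.0, 2.0), (3.0, 0.0)]
```

The three transformations are combined once, and the result is reused for every vertex. A real scene applies the same idea to millions of vertices, which is exactly the repetitive matrix work GPUs were built for.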

So, if you have a piece of hardware that can do matrix computation more efficiently, use it.

That then gave birth to the concept of the graphics processing unit or GPU.

Like the 8087, the GPU became the co-processor to the CPU.

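As it turns out, GPUs are also good at the work needed to find the next block on proof-of-work distributed ledger technology (DLT) systems like Bitcoin. That work is not matrix math but brute-force hashing: miners compute enormous numbers of SHA-256 hashes, each independent of the others, which suits the many parallel cores of a GPU. That’s why we saw the huge uptake and shortage of GPUs during the “cryptocurrency mining” hype bubbles of the 2010s.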
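Proof-of-work mining is a guessing game: hash the block header with a different nonce until the result meets a difficulty target. The toy sketch below uses Python's `hashlib` and, like Bitcoin, applies SHA-256 twice. The simplifications are assumptions for illustration: the header is a made-up byte string rather than Bitcoin's 80-byte header structure, and "difficulty" is just a count of leading zero hex digits rather than a numeric target. What it does show faithfully is that every guess is independent of every other.

```python
import hashlib

def block_hash(header: bytes, nonce: int) -> str:
    # Double SHA-256, as Bitcoin uses, over a toy header plus an 8-byte nonce.
    data = header + nonce.to_bytes(8, "little")
    return hashlib.sha256(hashlib.sha256(data).digest()).hexdigest()

def mine(header: bytes, difficulty: int, max_nonce: int = 10_000_000):
    """Return (nonce, hash) for the first nonce whose hash starts with
    `difficulty` zero hex digits, or None if none is found."""
    target = "0" * difficulty
    for nonce in range(max_nonce):
        # Each guess depends only on (header, nonce), never on earlier guesses,
        # so different nonce ranges could be handed to different GPU cores.
        digest = block_hash(header, nonce)
        if digest.startswith(target):
            return nonce, digest
    return None

header = b"toy block: alice pays bob 1 coin"   # made-up block contents
print(mine(header, difficulty=4))
```

Each extra zero hex digit multiplies the expected number of guesses by 16, which is why miners moved from CPUs to GPUs (and, for Bitcoin, later to ASICs).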

Fast forward a few years and we are in a new AI hype bubble. Matrix computation is critical to machine learning, because the forward-propagation and back-propagation steps used to train neural networks are dominated by matrix multiplications. This kind of computing is often described as “embarrassingly parallel”: many of the individual calculations, such as each element of a matrix product, can be done independently of one another. That parallel computation is greatly accelerated by GPUs with thousands of cores and many gigabytes of memory.
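As a sketch of why forward and back propagation boil down to matrix products, here is one training step for a hypothetical single linear layer (2 inputs, 1 output, a batch of 3 samples) with a half-sum-of-squared-errors loss, in plain Python. The data, weights and learning rate are made up for illustration; ML frameworks run the same products, at vastly larger sizes, on GPUs.

```python
def matmul(a, b):
    # Each output element C[i][j] is an independent dot product of row i of a
    # and column j of b; none depends on another, so all could run in parallel.
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

# Hypothetical toy data: a batch of 3 samples, 2 input features, 1 output.
X = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]            # inputs, shape (3, 2)
Y = [[1.0], [2.0], [3.0]]   # targets, shape (3, 1)
W = [[0.5], [-0.25]]        # weights of one linear layer, shape (2, 1)

# Forward propagation: predictions = X @ W (a matrix product).
pred = matmul(X, W)

# Loss L = 0.5 * sum((pred - Y)^2), so dL/dpred = pred - Y.
err = [[p[0] - y[0]] for p, y in zip(pred, Y)]

# Back propagation: dL/dW = X^T @ err (another matrix product).
grad_W = matmul(transpose(X), err)

# One gradient-descent update with a made-up learning rate.
learning_rate = 0.01
W = [[w[0] - learning_rate * g[0]] for w, g in zip(W, grad_W)]

print(pred)     # [[0.0], [0.5], [1.0]]
print(grad_W)   # [[-15.5], [-20.0]]
```

Both the prediction (`X @ W`) and the weight gradient (`X^T @ err`) are matrix multiplications. Scale the matrices up to millions of parameters and the independence of each output element is what a GPU exploits.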

Here’s a MythBusters video from 2010 contrasting work done serially by a CPU with work done in parallel by a GPU (it was shown at an NVIDIA event, hence the logo).

GPUs hold a speed advantage over CPUs, but that does not mean every machine learning (and by extension generative AI) training job needs GPUs. Nor does it mean that you need a GPU to use a trained foundation model on your local network.

Computational parallelism is almost always the result of the algorithms one chooses. If algorithms can be adjusted not only to utilise the advantages of parallel computation but also to make clever use of CPUs, we can slow down and mitigate the current demand for GPUs.
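A small illustration of how the algorithm, rather than the hardware, determines how much parallelism is available: summing a list with a simple loop creates one long chain of dependent additions, while a pairwise (tree) reduction computes the same total in far fewer rounds of independent additions. This sketch only counts the steps; it does not actually run anything in parallel.

```python
def serial_sum(xs):
    """Left-to-right sum: each addition waits on the previous one."""
    total, steps = 0, 0
    for x in xs:
        total += x
        steps += 1
    return total, steps          # steps = length of the dependency chain

def tree_sum(xs):
    """Pairwise (tree) reduction: additions within a round are independent."""
    level = list(xs)
    if not level:
        return 0, 0
    rounds = 0
    while len(level) > 1:
        # All additions in this round could run at once on separate cores.
        paired = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:       # an odd leftover element moves up unchanged
            paired.append(level[-1])
        level = paired
        rounds += 1
    return level[0], rounds      # rounds is about log2(len(xs))

numbers = list(range(1, 1025))
print(serial_sum(numbers))   # (524800, 1024)
print(tree_sum(numbers))     # (524800, 10)
```

Same answer, but 1,024 dependent steps versus 10 rounds. On a real CPU, each round's independent additions could be spread across cores or SIMD lanes; the loop version offers nothing to spread.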

Some of this mitigation would have to happen in how CPUs are built. There is a current trend to include NPUs (Neural Processing Units) in CPUs. NPUs are similar to GPUs but simpler in implementation, and hence less complex and less power hungry.

Having both an NPU and a GPU in a system can be a useful architecture. Should it be the norm? Perhaps.

There are other ways to get the same or similar capabilities as a GPU: ASICs (application-specific integrated circuits) designed for particular heavy computations, and TPUs (Tensor Processing Units). TPUs are built specifically for the tensor operations used in ML, so they are not as general purpose as GPUs can be.

Will the likes of Intel, AMD and ARM take up the challenge of getting matrix and related computing capabilities into the CPU? I think they do see the risk/reward opportunity ahead, and the NPU is a starting point.

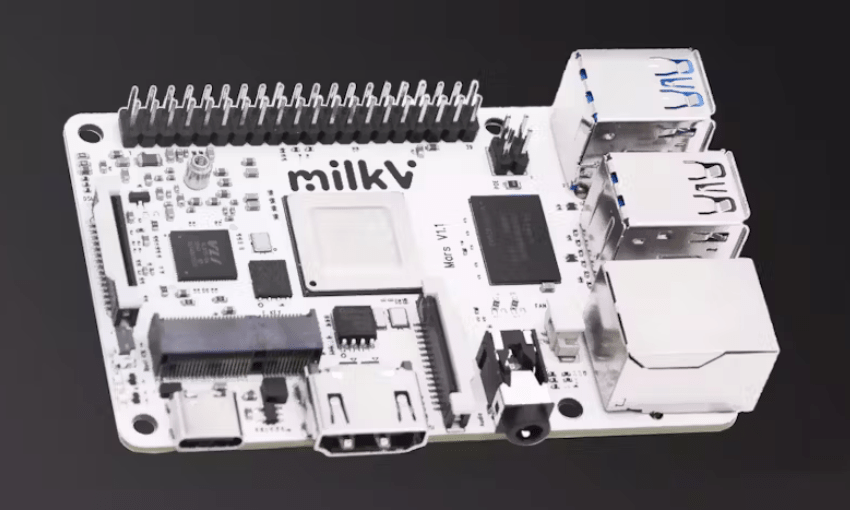

I would not wait for them to start offering solutions. Instead, I would look to the RISC-V ecosystem for hardware that can take on the current industry leaders on GPU performance. The RISC-V RVA23 profile, officially ratified in October 2024, brings RISC-V essentially on par with Intel and ARM CPUs and includes the instruction sets needed for AI/ML and related math, notably the now-mandatory vector extension. This is a major development.

I am sold on the upward trajectory of RISC-V. The entire Instruction Set Architecture (ISA) is published under a Creative Commons license, and with RVA23 ratified, most of the jigsaw pieces are now in place to grow an ecosystem from the distant edge to the central core data centres and everything in between.

Will a RISC-V CPU-GPU pairing be the standard by 2028? I think so. It has to be.

I hope this has inspired you to learn more about CPUs. I invite you to spend some time at https://cpu.land/ to learn all you can about them. CPU.land is built by Hack Club, a global alliance of high school students!